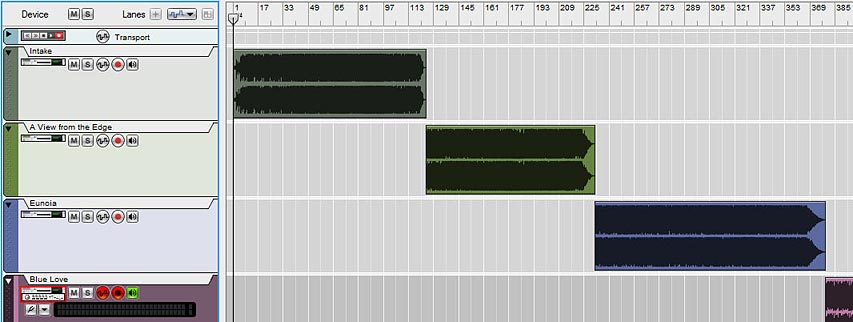

In a previous tutorial I spoke about how you can create frequency-based FX and divide your FX, sending different delays or phasers or any combination of FX to different frequencies in your mix. This time we’re going to send those same FX to different locations in your mix: Front, Back, Left and Right. This way, we’ll create different FX for 4 different corners of your mix.

The tutorial files can be downloaded here: 4-corner-spatial-fx. This zip file contains 2 Combinators: 4-corner delay FX and 4-corner phaser FX.

Starting off: creating the Front and Back sections

First, the video:

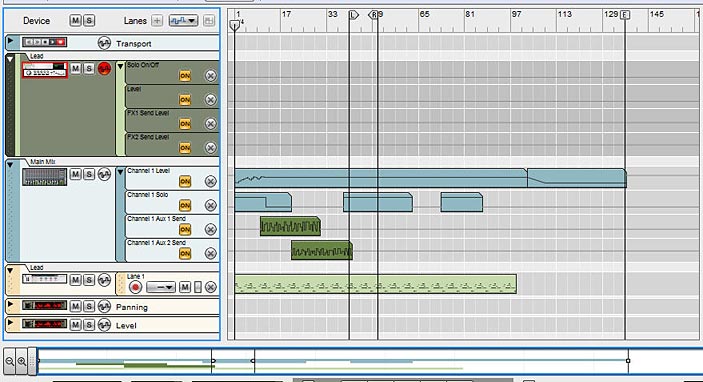

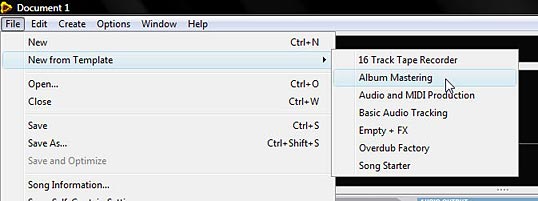

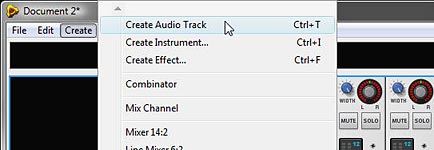

- First, open a new document in Reason with all the usual suspects. Create a main mixer and a sound source (an initialized Thor will do just fine).

- Next, create a Combinator under the sound source. Inside the Combinator, hold down shift and create a Unison device (UN-16), Audio Merger/Splitter, 6:2 Line Mixer, Stereo Imager, RV7000, and for our FX device, let’s create a Phaser (PH-90).

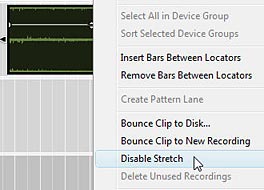

- Now holding shift down, select the Stereo Imager, RV7000, and PH-90 Phaser, then right click and select “Duplicate Devices and Tracks.”

- Routing time (note that all the audio routings we’re going to create here are in Stereo pairs): Flip the rack around, and move the Thor Audio outputs into the Combinator Audio inputs. Send the Combinator outputs to Channel 1 on the main mixer. Send the Combinator To Devices outputs into the Unison inputs. Then send the Unison outputs to the Audio Splitter inputs. Send 1 split into the first Stereo Imager’s inputs (we’ll call this the Front Imager), and the second split into the second Stereo Imager’s inputs (we’ll call this the Back Imager).

- Continuing with our routing, send the Imager outputs to the RV7000 Inputs (do this for both front and back imagers). Then send the RV7000 outputs to the Phaser inputs (both front and back). Then send the front and back Phaser outputs to Channels 1 and 3 on the 6:2 line mixer. Finally, send the Mixer’s master output to the “From Devices” inputs on the Combinator.

The Routings on the back of the rack. Looks complicated, but it's really pretty straightforward.

- Flip the rack around to the front. Now it’s time to set up some parameters. On the Front Imager, send both the Lo and Hi bands fully Mono (fully left). On the Back Stereo Imager, send both the Lo and Hi bands fully Wide (fully right).

- Open up the Remote Programmer on both the front and back RV7000 Reverbs. The Hall algorithms are the default and these are fine for now. On the front Reverb, reduce the Size fully (to 13.2 m), reduce the global Decay to around 50, and increase the HF Damp to around 84. On the back Reverb, increase the Size fully (to 39.6 m) and increase the Decay to around 98. Leave the HF Damp at its default of around 28. Finally, decrease the Dry/Wet knob on both reverbs to somewhere around 30-40.

- Open up the Combinator’s Programmer, select the 6:2 Line Mixer and enter these settings:

Rotary 1 > Channel 1 Level: 0/85

Rotary 3 > Channel 3 Level: 0/85

Now the first Rotary controls the front mix, and the third Rotary controls the back mix. If you play your sound source through this FX Combinator, you’ll hear the front and back sounds by adjusting the Rotaries. Things get more interesting if you apply different settings to your two Phaser devices. Even some subtle changes to the Frequency and Width parameters can provide a much richer soundscape, which makes even Thor’s initialized patch sound pretty interesting.
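To make the "0/85" settings a little more concrete, here is a minimal Python sketch of how a Rotary position could translate into a channel level. It assumes the Rotary runs from 0 to 127 and that the Combinator scales it linearly between the Min and Max values you enter in the Programmer; the function name `rotary_to_level` is made up for this illustration and is not part of Reason.

```python
def rotary_to_level(rotary, lo=0, hi=85):
    """Map a Rotary position (assumed 0-127) onto a Min/Max range.

    Assumption: the scaling is linear between lo and hi.
    """
    if not 0 <= rotary <= 127:
        raise ValueError("rotary must be in the range 0..127")
    return round(lo + (hi - lo) * rotary / 127)


# Rotary fully down, halfway, and fully up
for position in (0, 64, 127):
    print(position, "->", rotary_to_level(position))
```

With a maximum of 85, turning a Rotary all the way up stops the channel fader at 85 instead of pushing it to the top of its travel.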

You can leave things as they are, or you can move on and create two more spatial corners in the mix by adding Left and Right panning. In this way, you create a 4-Corner FX split for Front Left, Front Right, Back Left, and Back Right.

Moving from side to side

Now, for the second part in the Video Series:

So let’s continue on our journey and create a split for left and right.

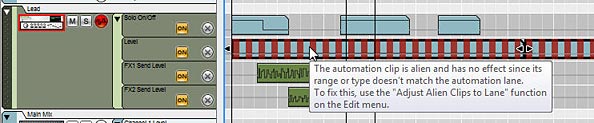

- The first thing we’ll have to do is hold the shift key down and create two more phasers: one next to the front phaser and another next to the back phaser. Then select the front RV7000 and, holding shift down, create a Spider Audio Merger/Splitter. Do the same for the back by selecting the back RV7000 and creating another Spider Audio Merger/Splitter.

- Flip to the back of the rack and let’s set up some new routings. Move the cables from the inputs on both original Phasers to the main Split inputs of their respective Spider Splitters. Then, for the front, send one split to Front Phaser 1 (let’s call this left) and another split to Front Phaser 2 (let’s call this right). Do the same for the back pair. Finally, send the outputs from the two new phasers to Channels 2 and 4 respectively (front right to Channel 2, back right to Channel 4).

- Flip to the front of the rack and on the 6:2 Line Mixer set the panning for Channels 1 and 3 to about -22 (left) and Channels 2 and 4 to +22 (right). How far left or right you set the panning is really a matter of taste. With this all set up, the 6:2 Line Mixer will be set up as follows:

Channel 1: Front Left Phaser

Channel 2: Front Right Phaser

Channel 3: Back Left Phaser

Channel 4: Back Right Phaser

- Open up the Combinator Programmer, and assign the 6:2 Line Mixer Channel 2 and Channel 4 to Rotary 2 and 4 respectively as follows:

Rotary 2 > Channel 2 Level: 0/85

Rotary 4 > Channel 4 Level: 0/85

- Now you can provide labels for all 4 rotaries as follows:

Rotary 1: Front Left

Rotary 2: Front Right

Rotary 3: Back Left

Rotary 4: Back Right
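If you're curious what a pan setting of around -22 or +22 does to the left and right levels, the following Python sketch gives a rough idea. It is only an illustration under two assumptions that don't come from Reason itself: that the pan knob runs from -64 to 63, and that the mixer uses a constant-power pan law. The function name `pan_gains` is hypothetical.

```python
import math


def pan_gains(pan, pan_min=-64, pan_max=63):
    """Return (left_gain, right_gain) for a pan value.

    Assumptions: pan range of -64..63 and a constant-power pan law.
    """
    pan = max(pan_min, min(pan_max, pan))
    # Normalise so that hard left is 0.0 and centre (pan 0) is 0.5
    position = (pan + 64) / 128
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)


for label, pan in (("Left corners", -22), ("Centre", 0), ("Right corners", 22)):
    left, right = pan_gains(pan)
    print(f"{label}: L={left:.2f} R={right:.2f}")
```

Under these assumptions a moderate pan still leaves a fair amount of signal on the opposite side, which is why each corner leans to one side rather than sitting hard left or hard right.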

And there you have it. A 4-corner mix with different FX for each corner. You don’t have to restrict yourself to Phasers. With some ingenuity you can assign any FX to any location, or any combination of FX to any of these 4 locations, and all of those with different parameters too. The only thing left is to adjust the Phasers to have different settings as you see fit.
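If it helps to see the whole signal flow at a glance, the routing described in this tutorial can be written down as a small graph. This Python sketch is just a way of checking the cabling on paper; the device labels are my own shorthand, not names Reason assigns.

```python
# Each device points to the device(s) its outputs are cabled into.
ROUTING = {
    "Thor": ["Combinator In"],
    "Combinator In": ["UN-16 Unison"],
    "UN-16 Unison": ["Main Spider"],
    "Main Spider": ["Front Imager", "Back Imager"],
    "Front Imager": ["Front RV7000"],
    "Back Imager": ["Back RV7000"],
    "Front RV7000": ["Front Spider"],
    "Back RV7000": ["Back Spider"],
    "Front Spider": ["Front Left Phaser", "Front Right Phaser"],
    "Back Spider": ["Back Left Phaser", "Back Right Phaser"],
    "Front Left Phaser": ["Mixer Ch 1"],
    "Front Right Phaser": ["Mixer Ch 2"],
    "Back Left Phaser": ["Mixer Ch 3"],
    "Back Right Phaser": ["Mixer Ch 4"],
}


def signal_paths(node, graph=ROUTING):
    """List every path from node down to a device with no further outputs."""
    downstream = graph.get(node, [])
    if not downstream:
        return [[node]]
    return [[node] + path for nxt in downstream for path in signal_paths(nxt, graph)]


for path in signal_paths("Thor"):
    print(" -> ".join(path))
```

Swapping in a different FX for one corner amounts to replacing one of the four Phaser entries with another device while keeping its mixer channel.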

Here’s a video showing you some of the things you can do to modulate the Phasers:

A few other notes:

- We set up a Unison device in front of the mix because it ensures that the signal sent into both Imagers is in Stereo. The Stereo Imager needs this to function as it should; it won’t work with a Mono signal. It means that even if you use a Subtractor, for example (which is mono), the signal can still be sent into the Imagers and the Imagers can work their magic.

- Using the Width / Mono setting on the Imager bands helps to create the illusion of front and back audio locations. Used in conjunction with the Reverbs, you can create some sophisticated positioning not only with your FX, but also with audio of any kind. When you move towards Mono, the sound appears to come from the front of the mix. By widening the bands, the sound becomes more spread out and appears to come from the back.

- Just as with the Imagers, changing the space size and decays on the Reverbs helps the illusion along. Smaller sizes and shorter decays mean a tighter reverb space, which makes the sound appear closer. For the back Reverb, the opposite is in effect. By creating a wider space with a longer reverb tail, you end up with a sound that is pushed further back. Keeping the same algorithm type still binds the two reverb spaces together. However, there’s nothing preventing you from trying different algorithms altogether (for example, a Small Space reverb for the front and an Arena reverb for the back).

- Ever look at those Escher drawings where the staircases keep looping back into themselves? They are impossible pictures. Well, the same can be achieved with sound. You can create some really weird effects by creating an impossible space. Try switching the Reverbs around but keeping the Imagers as they are. The Imagers will tell your ears that the sound should be coming from the front and back, but the reverbs will be telling you the reverse. It can be a disturbing effect. But in the virtual world, you can create these “Impossible” sounds easily. Try that one out.
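For the curious, here is a rough Python sketch of why the Imager needs a stereo signal and what Mono versus Wide does to it. It uses the common mid/side approach to stereo width; that the Stereo Imager works this way internally is an assumption on my part, and the function `set_width` is purely illustrative.

```python
def set_width(left, right, width):
    """Adjust stereo width of one sample pair using mid/side processing.

    width 0.0 = fully mono, 1.0 = unchanged, above 1.0 = wider.
    Assumption: a plain mid/side model, not Reason's actual algorithm.
    """
    mid = (left + right) / 2   # what both channels share
    side = (left - right) / 2  # what differs between the channels
    side *= width
    return mid + side, mid - side


print(set_width(1.0, 0.0, 0.0))  # stereo input collapsed to mono
print(set_width(1.0, 0.0, 2.0))  # stereo input widened
print(set_width(0.6, 0.6, 2.0))  # mono input: nothing to widen
```

In this model a mono input has no side component at all, so no amount of widening changes it, which is the job the Unison device does for us by creating a difference between the left and right channels.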

As always, I’d love to hear what you think. Show some love and drop me some feedback or any questions you might have. Until next time, good luck in all your musical endeavors.